Born or Built - Our Robotic Future Exhibition

Wow, what an exciting end to the year we had at emergeWorlds. There was a bit of everything: some overseas travel, developing virtual reality games and taking learning experiences to a whole new level. It feels as though VR, AR and new technology are gaining more and more interest from the general public. It’s going to be a big 2019.

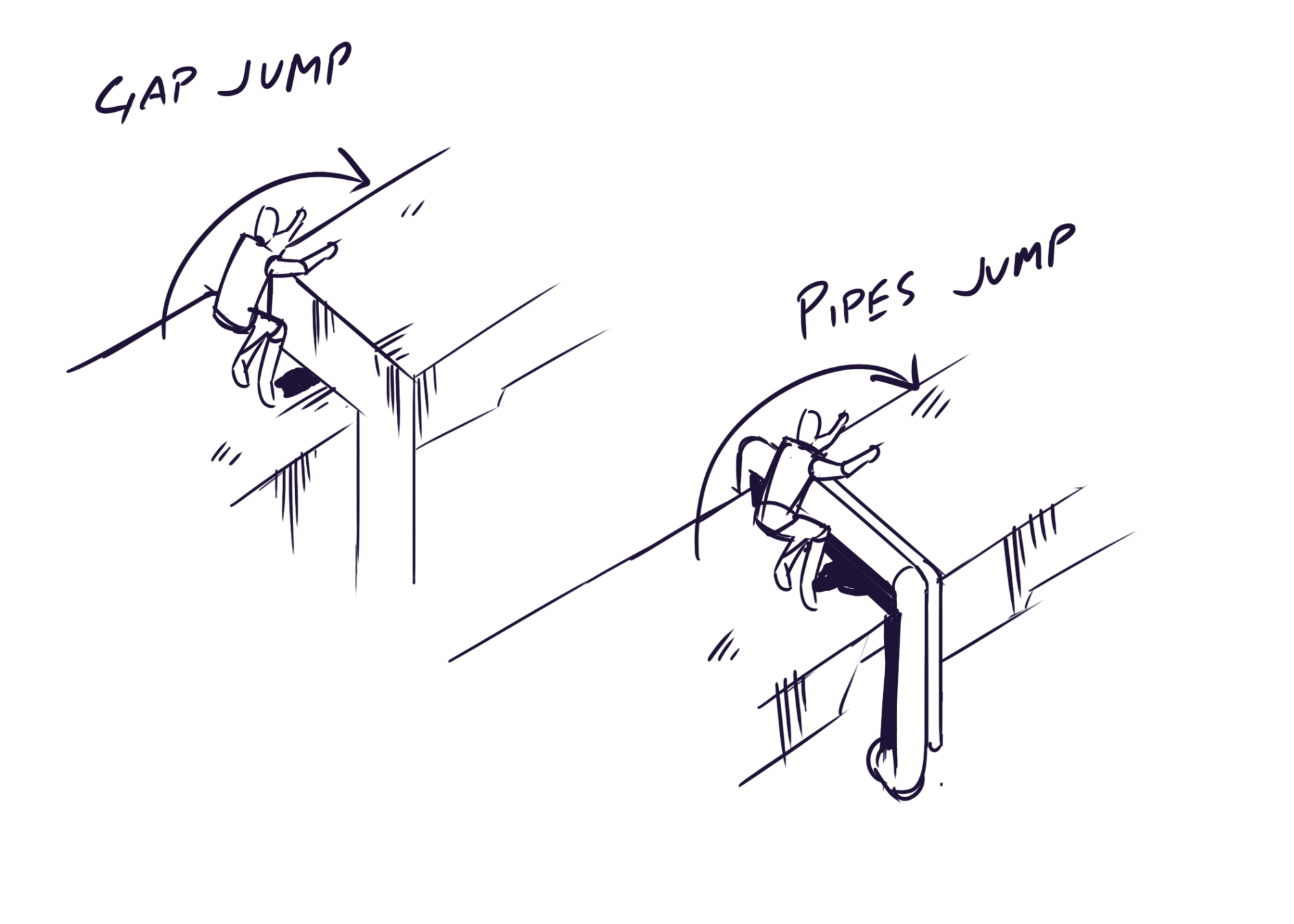

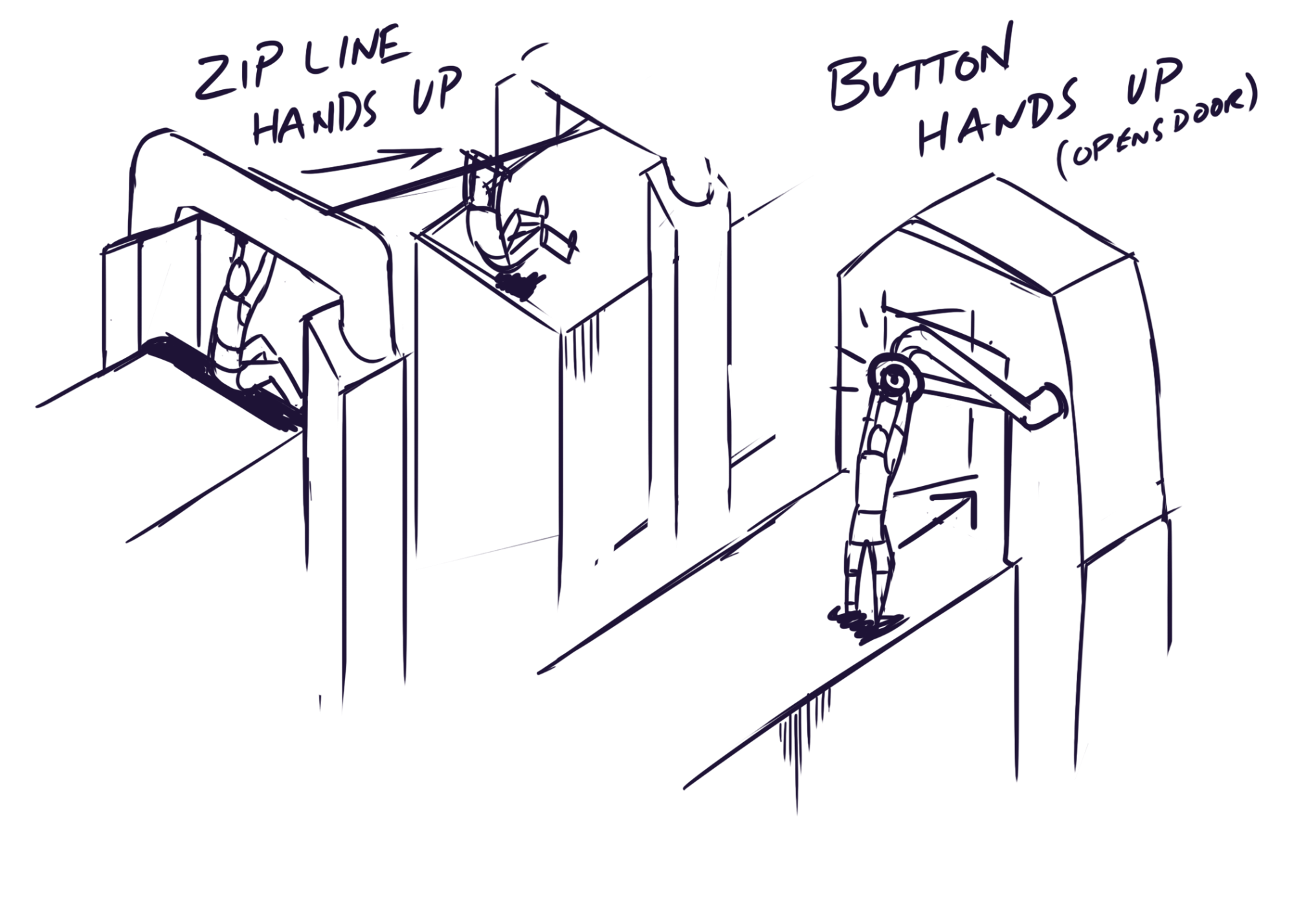

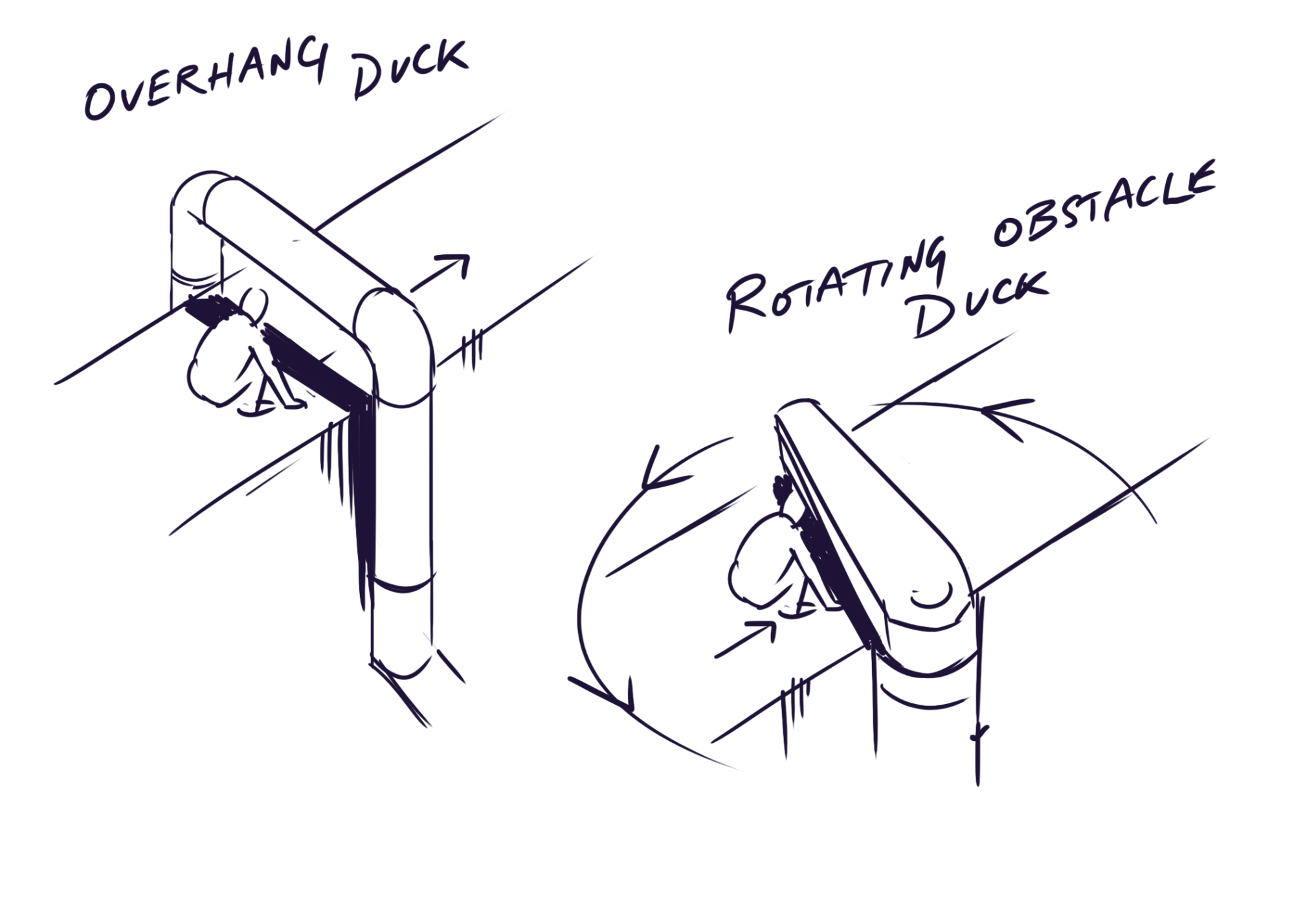

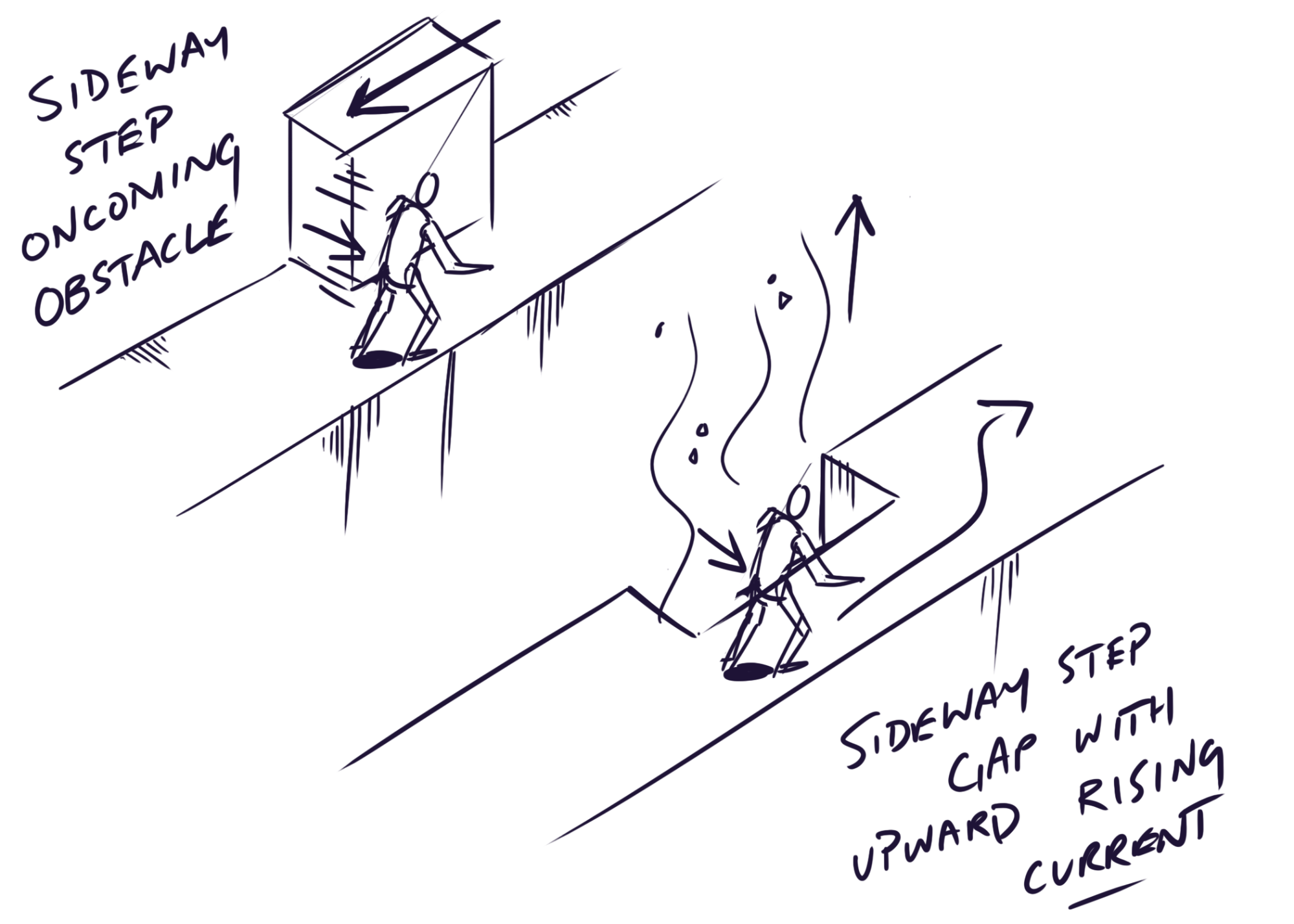

Concept Frames

Ideas & Obstacles

Born or Built - Our Robotic Future Exhibition

Talk about a cool project! We were very lucky to be asked by Questacon, the National Science and Technology Centre, to create a game and learning experience that kids around Australia will love. The purpose of the project was to demonstrate machine learning to children in a simple way.

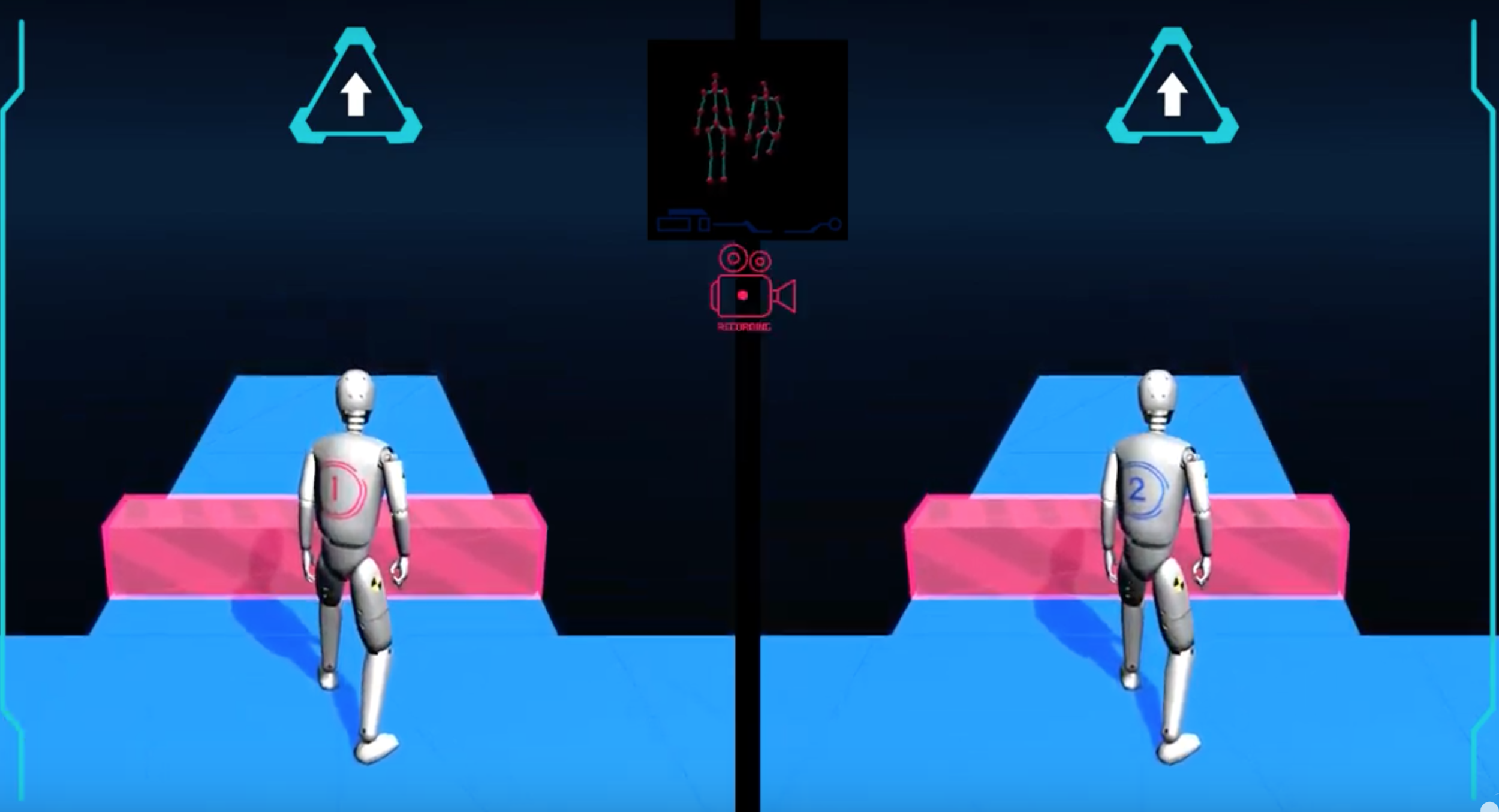

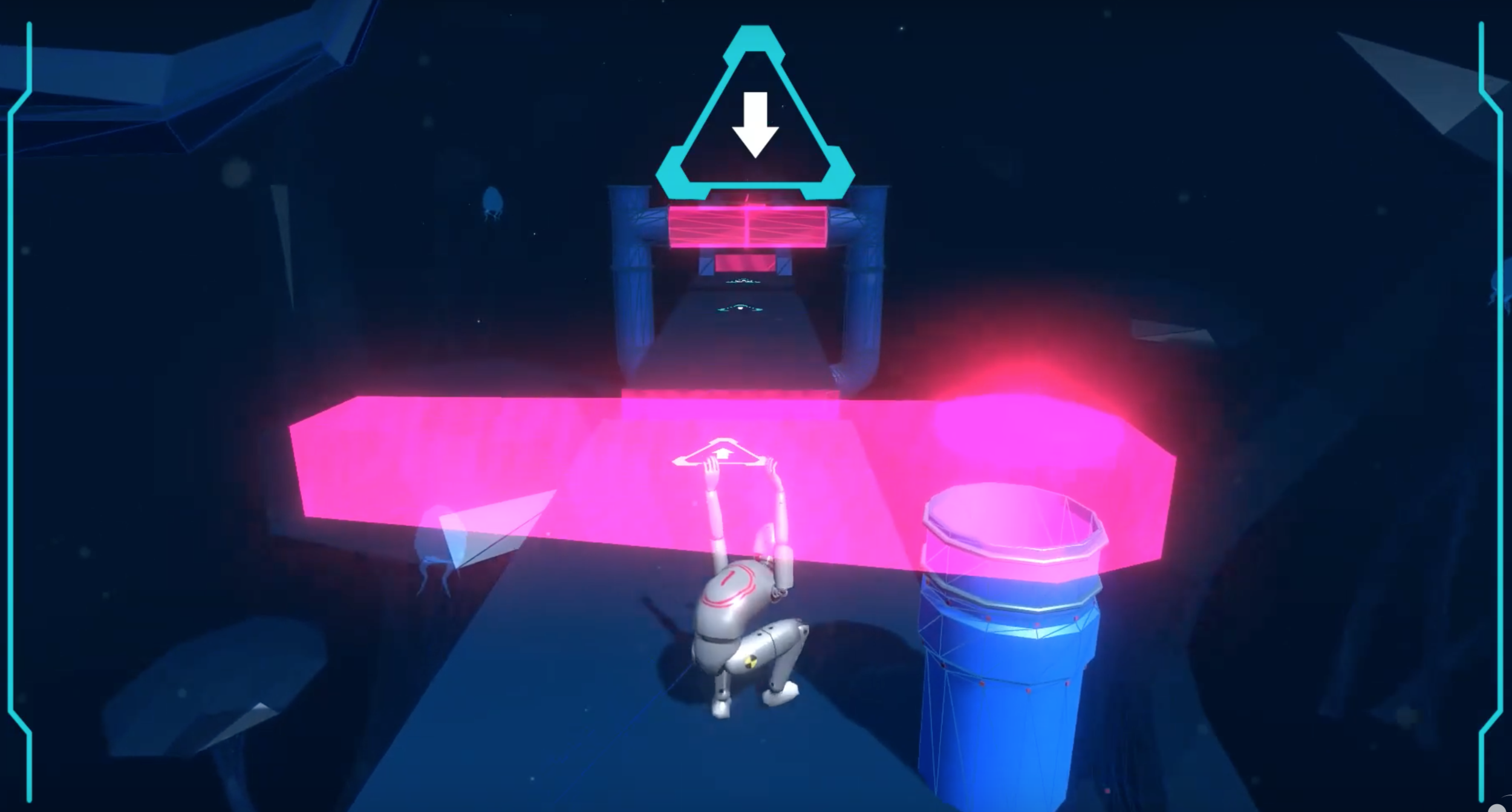

It’s a motion capture multiplayer experience where the player records actions like jumping, ducking and sidestepping to guide a Virtual Ragdoll through an obstacle course. Think of the action in TV shows like Wipeout or Takeshi’s Castle; that’s kinda what we were going for. If the player records the wrong actions, their Virtual Ragdoll can get seriously hurt and smashed by the obstacles in the course.
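To make the record-then-run idea concrete, here is a minimal sketch of how a recorded action sequence could be checked against a course. The obstacle names and the `REQUIRED_ACTION` mapping are made up for illustration and are not taken from the actual game.

```python
# Hypothetical mapping from obstacle type to the action that clears it.
REQUIRED_ACTION = {
    "low_bar": "jump",
    "swinging_arm": "duck",
    "side_hammer": "sidestep",
}


def run_course(obstacles, recorded_actions):
    """Pair each obstacle with the action recorded for it and report the outcome.

    Returns a list of (obstacle, action, cleared) tuples. If the player
    recorded fewer actions than there are obstacles, the missing actions
    are treated as None and the Ragdoll gets hit.
    """
    results = []
    for index, obstacle in enumerate(obstacles):
        action = recorded_actions[index] if index < len(recorded_actions) else None
        cleared = action == REQUIRED_ACTION[obstacle]
        results.append((obstacle, action, cleared))
    return results


if __name__ == "__main__":
    for obstacle, action, cleared in run_course(
        ["low_bar", "swinging_arm", "side_hammer"],
        ["jump", "jump", "sidestep"],
    ):
        print(obstacle, action, "cleared" if cleared else "smashed")
```

Keeping the recording and the run as separate steps mirrors the flow described above: the whole sequence is captured first, then played back against the obstacles.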

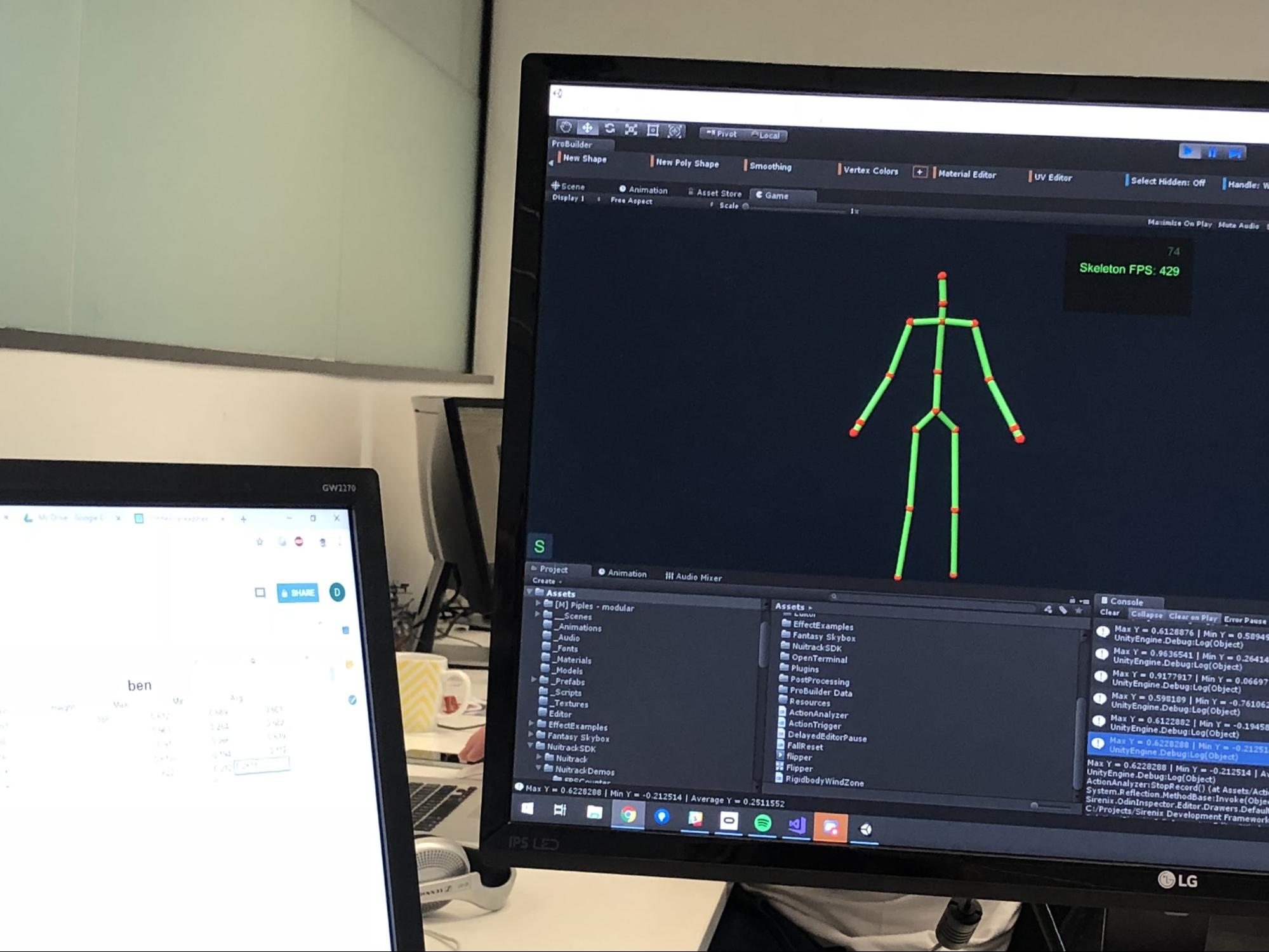

We have a lot of experience recording motion capture data and actions. In our VR game Dance Collider we motion captured our dancer Shane and applied all the moves to our characters in the game. The Ragdoll project was a different challenge, though, as we would have to motion capture actions live and could not rely on any post production to tidy them up. The game was designed to be as seamless as possible: the player first records their actions, then the Ragdoll takes off through the obstacle course.

During concepting we had a lot of discussion about which camera to use. We decided the best option was to use Intel RealSense depth cameras to record the player actions. We had previously used Kinect V2 cameras with Dance Collider, but because they are no longer made we needed a camera that will be supported going forward. We implemented workarounds to make the RealSense camera reasonably stable, but at this stage it is still not as stable as the Kinect V2 sensor.

Intel RealSense Depth Camera

After setting up the camera we spent a lot of time researching the variables that would affect our motion recording. We measured ideal distances from the camera, light sources, player heights and weights, the ideal height and angle of the camera, and many other variables.

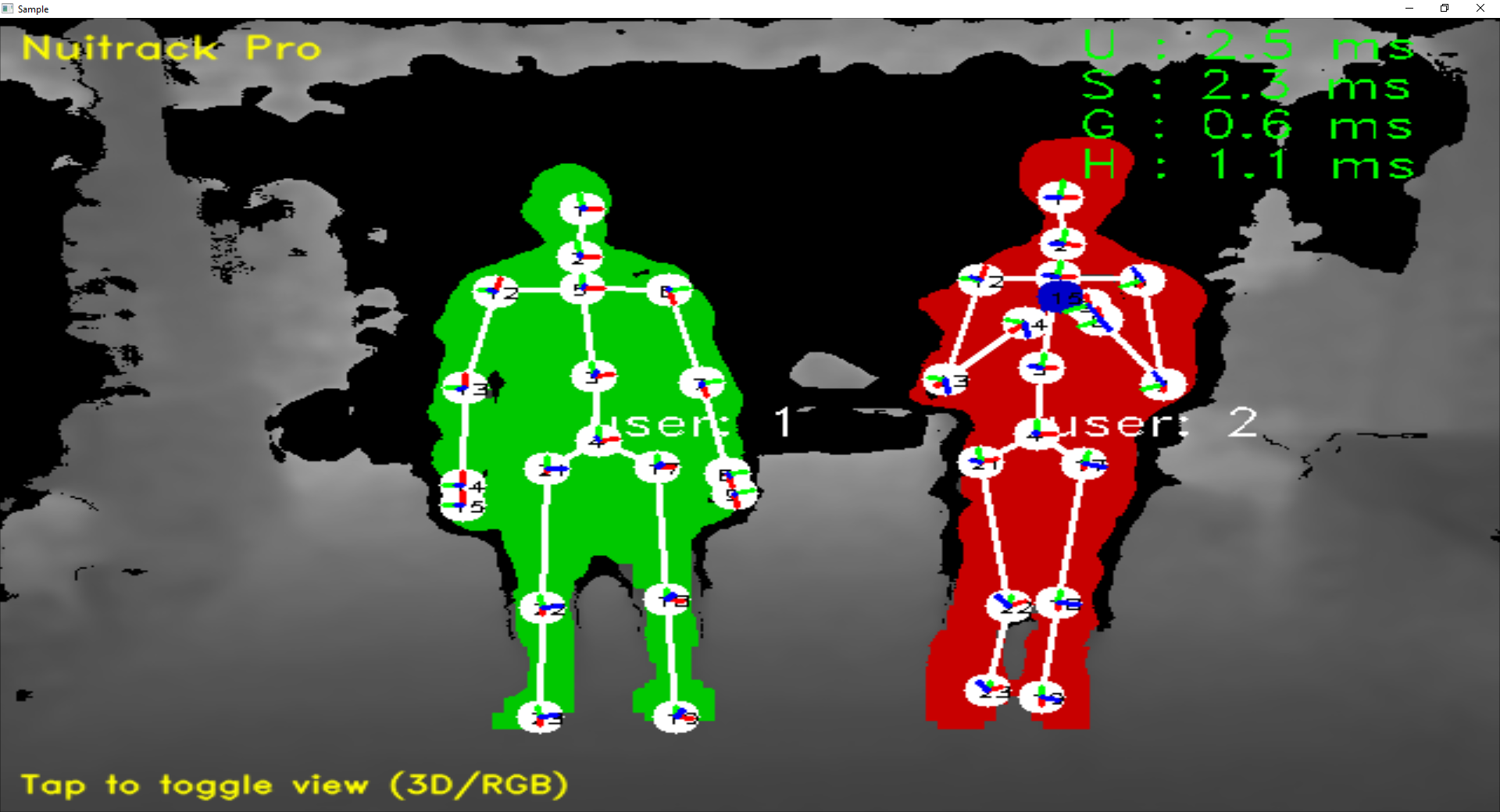

During the recording of actions we motion capture jumping, ducking, sidestepping, hitting and reaching up. Some of these are tough actions to motion capture, as the skeleton can drop out when parts of the body occlude each other. It took a lot of testing to figure out the ideal distance and angle of the camera so we could capture these actions with the least amount of occlusion from the body.

Another hurdle was figuring out the best distance to record the actions. If the player was too close, the actions couldn’t be captured properly because parts of the body would fall outside the capture frame. Too far away was a problem as well: the camera doesn’t have enough precision or fidelity to capture skeletal data at a great distance. So there is definitely a sweet spot for both the height and the distance of the camera that allows all body sizes and heights to be captured well.
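The "too close" half of that sweet spot is mostly geometry, so it can be roughed out before any testing. The sketch below is illustrative only: it assumes a simple pinhole view with a known vertical field of view, and the field of view, camera height and maximum tracking distance are placeholders to be measured for the actual camera, not figures from our setup.

```python
import math


def min_capture_distance(reach_height_m, camera_height_m, vertical_fov_deg, tilt_deg=0.0):
    """Closest distance (metres) at which both the floor and the top of the
    player's reach fit inside the vertical field of view.

    tilt_deg is the upward tilt of the camera (negative tilts it down).
    """
    half_fov = math.radians(vertical_fov_deg) / 2
    tilt = math.radians(tilt_deg)
    upper_edge = tilt + half_fov  # angle of the top of the frame above horizontal
    lower_edge = tilt - half_fov  # angle of the bottom of the frame (negative = below)
    if upper_edge <= 0 or lower_edge >= 0:
        raise ValueError("frame must span the horizontal for this simple model")

    # Distance needed to see the top of the reach (zero if it sits below the camera).
    above = max(0.0, reach_height_m - camera_height_m)
    d_top = above / math.tan(upper_edge)
    # Distance needed to see the player's feet on the floor.
    d_feet = camera_height_m / math.tan(-lower_edge)
    return max(d_top, d_feet)


def sweet_spot(reach_heights_m, camera_height_m, vertical_fov_deg, max_tracking_m, tilt_deg=0.0):
    """Return (nearest, farthest) distance that suits every player, or None.

    max_tracking_m is an assumed limit beyond which skeletal data is too noisy.
    """
    nearest = max(
        min_capture_distance(h, camera_height_m, vertical_fov_deg, tilt_deg)
        for h in reach_heights_m
    )
    if nearest > max_tracking_m:
        return None
    return (nearest, max_tracking_m)
```

Passing the reach heights of the shortest child and the tallest adult gives a first estimate of the usable band, which real-world testing then has to narrow down for occlusion and tracking quality.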

The experience had to work for adults and children, so there was a wide variety of body types we had to take into consideration. We found that if a child was skinny, their skeleton was quite hard to track because the data would get lost. We also observed that clothes become a factor in how well the camera can capture someone’s limbs. For example, when our 7-year-old girl relaxed her hands down by her side, they blended with her dress and lost tracking.

We had to assume players would turn up with a broad range of clothes, hair types and accessories, which would further limit our ability to reliably track and capture separate limbs. We developed a method to track the main data points when tracking dropped out on the limbs. The most stable parts of the body were the spine and shoulders. By measuring distance and rotation we could track our players’ movements from a few reliable points. Again, this was only used when the player’s data dropped out on their limbs.
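As a rough illustration of that fallback, the sketch below guesses a coarse action from the shoulders and spine alone by comparing the current pose with a calibrated standing pose. It is not our production code: the joint names, the axis convention (x to the camera's right, y up, z away from the camera, in metres) and the thresholds are all assumptions chosen for the example.

```python
import math
from dataclasses import dataclass

Point = tuple[float, float, float]  # (x, y, z) in metres; needs Python 3.9+


@dataclass
class Torso:
    left_shoulder: Point
    right_shoulder: Point
    spine_base: Point


def shoulder_mid(t: Torso) -> Point:
    """Midpoint between the two shoulders."""
    return tuple((a + b) / 2 for a, b in zip(t.left_shoulder, t.right_shoulder))


def torso_yaw_deg(t: Torso) -> float:
    """Rotation of the shoulder line about the vertical axis (0 = square to camera)."""
    dx = t.right_shoulder[0] - t.left_shoulder[0]
    dz = t.right_shoulder[2] - t.left_shoulder[2]
    return math.degrees(math.atan2(dz, dx))


def torso_lean_deg(t: Torso) -> float:
    """Angle between the spine (base to shoulder midpoint) and vertical."""
    mx, my, mz = shoulder_mid(t)
    bx, by, bz = t.spine_base
    return math.degrees(math.atan2(math.hypot(mx - bx, mz - bz), my - by))


def classify(rest: Torso, now: Torso, move_m: float = 0.15, lean_deg: float = 25.0) -> str:
    """Guess a coarse action from torso movement relative to the rest pose."""
    rx, ry, _ = shoulder_mid(rest)
    nx, ny, _ = shoulder_mid(now)
    if ny - ry > move_m:
        return "jump"
    if ry - ny > move_m or torso_lean_deg(now) > lean_deg:
        return "duck"
    if abs(nx - rx) > move_m:
        return "sidestep_right" if nx > rx else "sidestep_left"
    return "idle"
```

Actions such as hitting or reaching up cannot be told apart from the torso alone, which is one reason a fallback like this only makes sense while limb data is missing.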

The Virtual Ragdoll experience is great fun to play, highly interactive and turned out just as we had imagined. We think children especially will love it and we can’t wait to see them have a play. It is part of the Born or Built - Our Robotic Future exhibition, which opens in March 2019. There will be a full experiential booth and display set up for the exhibition. It will roll out at Questacon, then travel around Australia.